The European Commission’s preliminary finding that TikTok’s addictive design breaches the Digital Services Act (DSA) marks a significant shift in how regulators view social media responsibility, especially when it comes to children and vulnerable users. This is not a symbolic warning. It is a direct challenge to the design choices that have powered TikTok’s explosive growth.

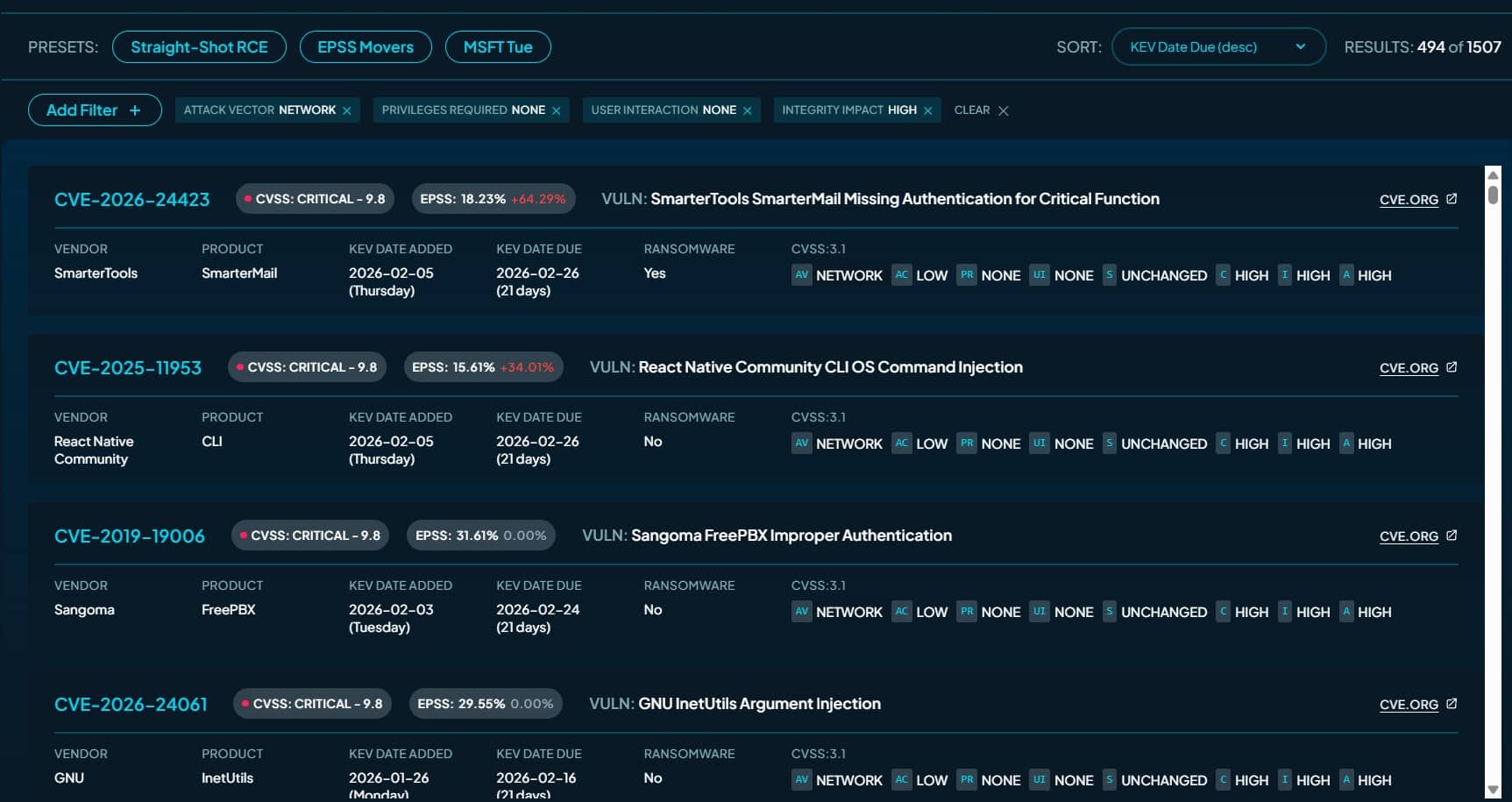

According to the Commission, TikTok’s core features, including infinite scroll, autoplay, push notifications, and a highly personalised recommender system, are engineered to keep users engaged for as long as possible. The problem, regulators argue, is that TikTok failed to seriously assess or mitigate the harm these features can cause, particularly to minors.

TikTok Addictive Design Fuels Compulsive Use

The Commission’s investigation found that TikTok did not adequately evaluate how its design impacts users’ physical and mental wellbeing. Features that constantly “reward” users with new content can push people into what experts describe as an “autopilot mode,” where scrolling becomes automatic rather than intentional.

Scientific research reviewed by the Commission links such design patterns to compulsive behaviour and reduced self-control. Despite this, TikTok reportedly overlooked key indicators of harmful use, including how much time minors spend on the app at night, how frequently users reopen the app, and other behavioural warning signs.

This omission matters. Under the Digital Services Act, platforms are expected not only to identify risks but to act on them. In this case, the Commission believes TikTok failed on both counts.

Risk Mitigation Measures Fall Short

The investigation also found that TikTok’s current safeguards do little to counter the risks created by its addictive design. Screen time management tools are reportedly easy to dismiss and introduce minimal friction, making them ineffective in helping users actually reduce usage.

Parental controls fare no better. While they exist, the Commission notes that they require extra time, effort, and technical understanding from parents, barriers that significantly limit their real-world impact.

At this stage, regulators believe that cosmetic fixes are not enough. The Commission has stated that TikTok may need to change the basic design of its service, including disabling infinite scroll over time, enforcing meaningful screen-time breaks (especially at night), and reworking its recommender system.

These findings are preliminary, but the message is clear: responsibility cannot be optional when a platform’s design actively shapes user behaviour.

How Governments View Social Media Harm

The scrutiny of TikTok’s addictive design comes amid a broader global reassessment of social media’s impact on young users. Countries including Australia, Spain, and the United Kingdom have taken steps in recent months to restrict or ban social media use by minors, citing growing concerns over screen time and mental health.

Europe’s stance reflects a wider regulatory trend: moving away from asking platforms to self-police, and toward enforcing accountability through law. This is consistent with other digital policy actions across the region, including investigations into platform transparency, data access for researchers, and online safety failures.

What Happens Next for TikTok

TikTok now has the right to review the Commission’s findings and respond in writing. The European Board for Digital Services will also be consulted. If the Commission ultimately confirms its position, it could issue a formal non-compliance decision, opening the door to fines of up to 6% of TikTok’s global annual turnover.

While the outcome is not yet final, the direction is unmistakable.

As Henna Virkkunen, Executive Vice-President for Tech Sovereignty, Security and Democracy, stated:

“Social media addiction can have detrimental effects on the developing minds of children and teens. The Digital Services Act makes platforms responsible for the effects they can have on their users. In Europe, we enforce our legislation to protect our children and our citizens online.”

The TikTok case is no longer just about one app. It is about whether growth-driven platform design can continue unchecked, or whether accountability is finally catching up.